rLLM: Relational Table Learning with LLMs

Motivation: Large Language Models (LLMs) are becoming an essential part of the AI landscape. However, applying them directly to real-world big data is prohibitively expensive. Because relational databases host nearly 80% of this data, it is crucial to explore how LLMs can be used efficiently on relational tables.

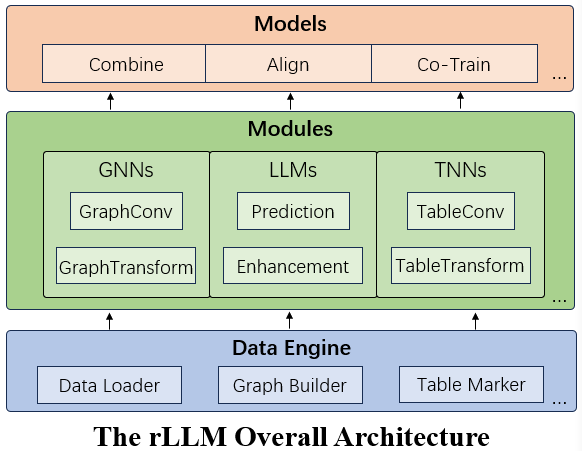

Our solution: rLLM (relationLLM) is an easy-to-use PyTorch library for Relational Table Learning (RTL) with LLMs. It performs two key functions: 1) breaking down state-of-the-art Graph Neural Networks (GNNs), LLMs, and Table Neural Networks (TNNs) into standardized modules; and 2) enabling the construction of novel models from these decomposed modules in a "combine, align, and co-train" way.
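
To make the "combine, align, and co-train" idea concrete, here is a minimal sketch in plain PyTorch. It does not use rLLM's actual API: `TableEncoder`, `GraphLayer`, and `CombinedModel` are hypothetical stand-ins for a TNN module, a GNN module, and their combination, and the toy data is made up for illustration. No LLM module is included. The sketch assumes that "align" can be read as making the table encoder's output dimension match the graph layer's input dimension, and that "co-train" means optimizing both modules end to end with a single loss.

```python
import torch
from torch import nn


class TableEncoder(nn.Module):
    """Hypothetical stand-in for a TNN: embeds each table row independently."""

    def __init__(self, in_dim: int, hidden_dim: int):
        super().__init__()
        self.mlp = nn.Sequential(nn.Linear(in_dim, hidden_dim), nn.ReLU())

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.mlp(x)


class GraphLayer(nn.Module):
    """Hypothetical stand-in for a GNN: one mean-aggregation step over neighbors."""

    def __init__(self, hidden_dim: int, out_dim: int):
        super().__init__()
        self.lin = nn.Linear(hidden_dim, out_dim)

    def forward(self, h: torch.Tensor, adj: torch.Tensor) -> torch.Tensor:
        # adj is a dense (n, n) adjacency matrix with self-loops; row-normalize it.
        deg = adj.sum(dim=1, keepdim=True).clamp(min=1.0)
        return self.lin((adj / deg) @ h)


class CombinedModel(nn.Module):
    """Combine: chain the two modules. Align: both share hidden_dim."""

    def __init__(self, in_dim: int, hidden_dim: int, num_classes: int):
        super().__init__()
        self.tnn = TableEncoder(in_dim, hidden_dim)
        self.gnn = GraphLayer(hidden_dim, num_classes)

    def forward(self, x: torch.Tensor, adj: torch.Tensor) -> torch.Tensor:
        return self.gnn(self.tnn(x), adj)


torch.manual_seed(0)
n_rows, in_dim, num_classes = 6, 4, 2
x = torch.randn(n_rows, in_dim)           # toy table: 6 rows, 4 numeric columns
adj = torch.eye(n_rows)                   # self-loops
for i in range(n_rows - 1):               # toy relations: link consecutive rows
    adj[i, i + 1] = adj[i + 1, i] = 1.0
y = torch.tensor([0, 0, 0, 1, 1, 1])      # toy row labels

model = CombinedModel(in_dim, hidden_dim=8, num_classes=num_classes)
opt = torch.optim.Adam(model.parameters(), lr=0.05)

# Co-train: one loss, gradients flow through both modules.
losses = []
for _ in range(100):
    opt.zero_grad()
    loss = nn.functional.cross_entropy(model(x, adj), y)
    loss.backward()
    opt.step()
    losses.append(loss.item())
```

Because both modules are ordinary `nn.Module` objects behind a shared embedding size, either one could be swapped for a different implementation without touching the training loop, which is the kind of modularity the description above points to.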

- Paper (Slides): https://arxiv.org/abs/2407.20157

- Code: https://github.com/rllm-project/rllm